2025

Jintao Lu, He Zhang, Yuting Ye, Takaaki Shiratori, Sebastian Starke, Taku Komura. 2025.

CHOICE: Coordinated Human-Object Interaction in Cluttered Environments for Pick-and-Place Actions.

ACM Transactions on Graphics (TOG).

[

Project

Paper

Video

]

Our hierarchical framework synthesizes full-body motions for object pick-and-place interactions and generalizes to cluttered environments with real-world complexity.

German Barquero, Nadine Bertsch, Manoj Kumar Marram Reddy, Carlos Chacón, Filippo Arcadu, Ferran Rigual, Nicky (Sijia) He, Cristina Palmero, Sergio Escalera, Yuting Ye, Robin Kips*. 2025.

From Sparse Signal to Smooth Motion: Real-Time Motion Generation with Rolling Prediction Models.

CVPR.

[

Project

Paper

Video

Code

Data Request

]

We adapted a real-time diffusion model to handle hand-tracking failures in VR.

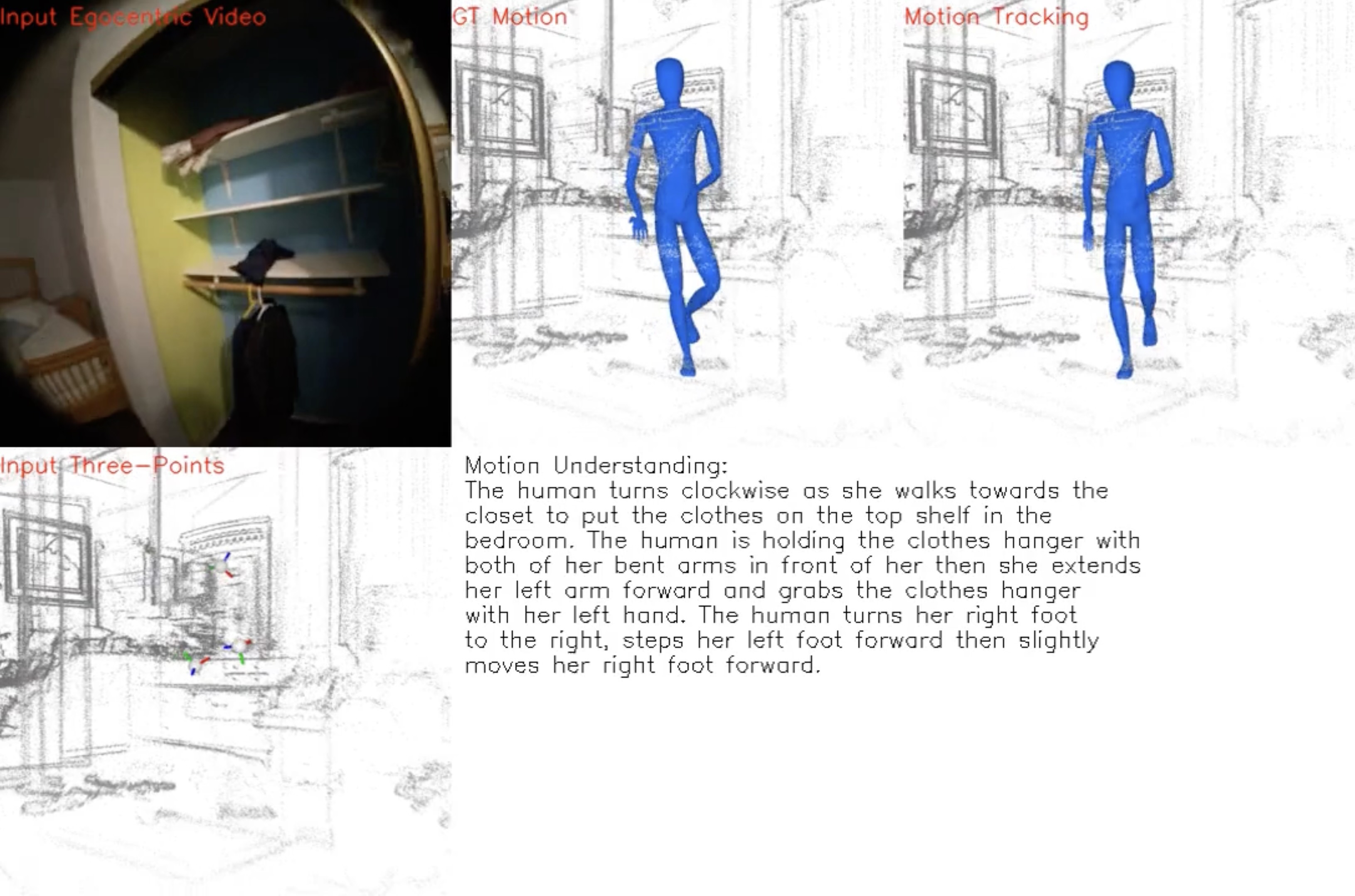

Fangzhou Hong, Vladimir Guzov, Hyo Jin Kim, Yuting Ye, Richard Newcombe, Ziwei Liu*, Lingni Ma*. 2025.

EgoLM: Multi-Modal Language Model of Egocentric Motions.

CVPR Oral.

[

Project

Paper

Video

]

EgoLM is an LLM-based model that jointly learns from egocentric videos, motion sensors, and the corresponding language descriptions from the Nymeria dataset.

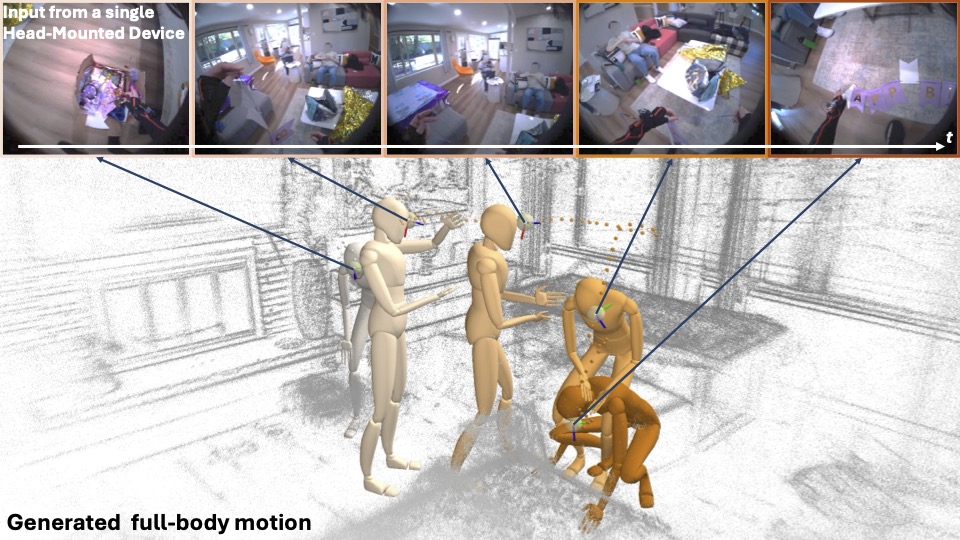

Vladimir Guzov†, Yifeng Jiang†, Fangzhou Hong, Gerard Pons-Moll, Richard Newcombe, C. Karen Liu*, Yuting Ye*, Lingni Ma*. 2025.

HMD^2: Environment-aware Motion Generation from Single Egocentric Head-Mounted Device.

3DV.

[

Project

Paper

Video

]

We reconstruct full-body motion solely from signals in the Aria AR glasses, including SLAM poses, point clouds, and CLIP embeddings from images of the color camera.

2024

Lingni Ma, Yuting Ye, Fangzhou Hong, Vladimir Guzov, Yifeng Jiang, Rowan Postyeni, Luis Pesqueira, Alexander Gamino, Vijay Baiyya, Hyo Jin Kim, Kevin Bailey, David Soriano Fosas, C. Karen Liu, Ziwei Liu, Jakob Engel, Renzo De Nardi, Richard Newcombe. 2024.

Nymeria: A Massive Collection of Multimodal Egocentric Daily Motion in the Wild.

European Conference on Computer Vision (ECCV).

[

Project

Paper

Data and Code

Data Explorer

]

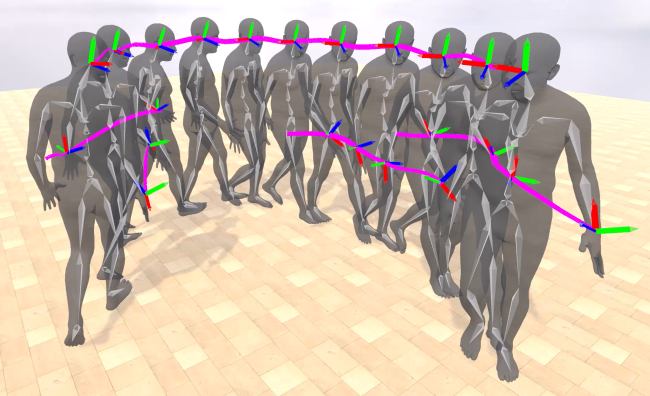

Sebastian Starke, Paul Starke, Nicky He, Taku Komura, Yuting Ye. 2024.

Categorical Codebook Matching for Embodied Character Controllers.

ACM Trans. Graph.

[

Project

Paper

Video

Code

]

We presented an end-to-end system that simultaneously learns a discrete latent codebook of motion and how to sample from it based on input control. We showcase various VR applications of controlling a full-body character from sparse inputs.

Peizhuo Li, Sebastian Starke*, Yuting Ye, Olga Sorkine-Hornung*. 2024.

WalkTheDog: Cross-Morphology Motion Alignment via Phase Manifolds.

SIGGRAPH Conference.

[

Project

Paper

Video

Code

Data

]

We achieved unsupervised semantic motion alignment between different skeleton morphologies via a shared and learned latent phase manifold. The key is to parameterize motion segments with a one-dimensional periodic phase and a discrete latent amplitude.

Dongseok Yang, Jiho Kang, Lingni Ma, Joseph Greer, Yuting Ye*, Sung-Hee Lee*. 2024.

DivaTrack: Diverse Motions and Bodies from Acceleration-enhanced Three-point Trackers.

Eurographics (best paper honorable mention).

[

Project

Paper

Video

Code

Data

]

We incorporated IMUs in the 3-point body tracking problem, and highlighted better results in challenging scenarios of tracking diverse subjects and a wide range of actions.

2023

Yifeng Jiang, Jungdam Won, Yuting Ye*, C. Karen Liu*. 2023.

DROP: Dynamics Responses from Human Motion Prior and Projective Dynamics.

SIGGRAPH Asia conference.

[

Project

Paper

Video

]

We endowed generative motion models with dynamic responses to forces and collision by treating motion prior as one of the energy terms in a projective dynamics simulation.

Deok-Kyeong Jang, Yuting Ye*, Jungdam Won, Sung-Hee Lee*. 2023.

MOCHA: Real-Time Motion Characterization via Context Matching. SIGGRAPH Asia conference.

[

Project

Paper

Video

]

We learned a style-agnostic context space with self-supervision for motion style transfer. In real time, we project the encoded input motion to the closest context latent, decode the context latent to a target style, then apply style transfer between input and target.

Mingyi Shi, Sebastian Starke, Yuting Ye, Taku Komura*, Jungdam Won*. 2023.

PhaseMP: Robust 3D Pose Estimation via Phase-conditioned Human Motion Prior.

International Conference on Computer Vision (ICCV).

[

Project

Paper

Video

]

We presented a human motion prior model utilizing learned phase features. Progressing phases monotonically generates plausible poses under severe occlusion or noise.

Daniele Reda, Jungdam Won, Yuting Ye, Michiel van de Panne*, Alexander Winkler*. 2023.

Physics-based Motion Retargeting from Sparse Inputs. SCA

[

Project

Paper

Video

]

We demonstrated a system for a user to puppeteer a bipedal creature in VR without additional hardware accessories. We simply replaced the human character with a creature in the QuestSim formula and applied only rudimentary kinematic retargeting.

Yunbo Zhang, Deepak Gopinath, Yuting Ye, Jessica Hodgins, Greg Turk, Jungdam Won*. 2023.

Simulation and Retargeting of Complex Multi-Character Interactions. SIGGRAPH North America Conference

[

Project

Paper

Video

]

We proposed an Interaction Graph representation that can not only simulate multi-character interactions, but also retarget to different proportions or even morphologies.

Sunmin Lee, Sebastian Starke, Yuting Ye, Jungdam Won*, Alexander W. Winkler*. 2023.

QuestEnvSim: Environment-aware Simulated Motion Tracking From Sparse Sensors. SIGGRAPH North America Conference

[

Project

Paper

Video

]

We extended QuestSim to support environment interactions such as sitting on chairs and stepping over obstacles using an egocentric heightmap as an additional policy input.

Alex Adkins, Aline Normoyle, Lorraine Lin, Yun Sun, Yuting Ye, Max Di Luca, Sophie Jörg. 2023.

How important are Detailed Hand Motions for Communication for a Virtual Character Through the Lens of Charades? ACM Trans. Graph. (TOG)

[

Project

Paper

]

We studied how artifacts in detailed hand motions affect the communication for a virtual character using the game of Charades, both in 2D videos and in VR.

Thu Nguyen-Phuoc, Gabriel Schwartz, Yuting Ye, Stephen Lombardi, Lei Xiao. 2023.

AlteredAvatar: Stylizing Dynamic 3D Avatars with Fast Style Adaptation. arXiv

[

Project

Paper

Video

]

We devised a meta-learning algorithm to quickly adapt a dynamic 3D avatar (Instant Codec Avatar) to novel styles prompted by text, an example image, or both. Our results preserve the subject identity across different expressions and views.

Yunbo Zhang, Alex Clegg, Sehoon Ha, Greg Turk*, Yuting Ye*. 2023.

Learning to Transfer In-Hand Manipulations Using a Greedy Shape Curriculum. Eurographics

[

Project

Paper

Video

]

Our algorithm learns in-hand manipulation of novel objects from a motion capture demonstration. A novel "Greedy Shape Curriculum" is proposed to successfully transfer the input manipulation motion on a simple shape to various complex objects.

Qi Feng, Kun He*, He Wen, Cem Keskin, Yuting Ye. 2023.

Rethinking the Data Annotation Process for Multi-view 3D Pose Estimation with Active Learning and Self-Training. WACV

[

Project

Paper

Code

]

We devised an active learning strategy to reduce manual annotation effort for 3D pose estimation problems using multi-view consistency and self-training with pseudo labels.

2022

Alexander W. Winkler, Jungdam Won, Yuting Ye. 2022.

QuestSim: Human Motion Tracking From Sparse Sensors With Simulated Avatars. SIGGRAPH Asia Conference

[

Project

Paper

Video

]

We apply deep reinforcement learning to control a simulated full body avatar in real time. The avatar is driven only by input signals from a VR headset and two controllers, and it is able to reliably follow the user's motion in a variety of activities.

Yifeng Jiang, Yuting Ye*, Deepak Gopinath, Jungdam Won, Alexander W. Winkler, C. Karen Liu*. 2022.

Transformer Inertial Poser: Real-time Human Motion Reconstruction From Sparse IMUs With Simultaneous Terrain Generation. SIGGRAPH Asia Conference

[

Project

Paper

Video

Code

]

We reconstruct both the human motion and the environment from 6 body-worn IMUs.

2021

Alex Adkins, Lorraine Lin, Aline Normoyle, Ryan Canales, Yuting Ye, Sophie Jörg. 2021.

Evaluating Grasping Visualizations and Control Modes in a VR Game. ACM Transactions on Applied Perception (TAP)

[

Project

Paper

Video

]

We built a VR Escape Room game to study virtual hand-object manipulation. An immersive virtual environment is an important factor in VR perception studies.

He Zhang, Yuting Ye*, Takaaki Shiratori, Taku Komura*. 2021.

ManipNet: Neural Manipulation Synthesis with a Hand-Object Spatial Representation. ACM Trans. Graph. (SIGGRAPH)

[

Project with Code

Paper

Video

]

We synthesize detailed finger motions for in-hand object manipulation from trajectories of the wrists and the objects, by representing their geometric relationship.

Binbin Xu, Lingni Ma*, Yuting Ye, Tanner Schmidt, Christopher D. Twigg, Steven Lovegrove. 2021.

Identity-Disentangled Neural Deformation Model for Dynamic Meshes. arXiv: 2109.15299

[

Paper

]

We learn a DeepSDF-like neural deformation model with disentangled identity and shape latent spaces from unregistered and partial 4D scans for pose and shape estimation.

2020

Yi Zhou, Chenglei Wu*, Zimo Li, Chen Cao, Yuting Ye, Jason Saragih, Hao Li*, Yaser Sheikh. 2020.

Fully Convolutional Mesh Autoencoder using Efficient Spatially Varying Kernels. NeurIPS

[

Project

Paper

Code

]

Mesh convolution is challenging due to irregular local connectivities. We proposed a novel convolution operator with globally shared weights and varying local coefficients.

Sophie Jörg, Yuting Ye, Michael Neff, Franziska Mueller, Victor Zordan. 2020.

Virtual hands in VR: motion capture, synthesis, and perception. SIGGRAPH and SIGGRAPH Asia Courses.

[

Course Notes

Presentation

]

In this course, we cover state-of-the-art methods of hand representation and animation in virtual reality. Topics include finger motion capture hardware and algorithms, hand animation with or without physics, and hand perception in VR.

Shangchen Han, Beibei Liu, Randi Cabezas, Christopher D Twigg, Peizhao Zhang, Jeff Petkau, Tsz-Ho Yu, Chun-Jung Tai, Muzaffer Akbay, Zheng Wang, Asaf Nitzan, Gang Dong, Yuting Ye, Lingling Tao, Chengde Wan, Robert Wang. 2020.

MEgATrack: monochrome egocentric articulated hand-tracking for virtual reality. SIGGRAPH

[

Project

Paper

Video

]

Hand tracking from monochrome cameras on the standalone Quest VR headsets.

Julian Habekost, Takaaki Shiratori*, Yuting Ye, Taku Komura*. 2020.

Learning 3D Global Human Motion Estimation from Unpaired, Disjoint Datasets. BMVC

[

Project

Paper

Video

]

Natural human motions are constrained to maintain contacts and body size over time. These constraints provide useful information for estimating global motion from monocular videos. We show how to learn such a prior from a generic motion database independent of the videos.

2019

Yikang Li, Chris Twigg*, Yuting Ye, Lingling Tao, Xiaogang Wang. 2019.

Disentangling Pose from Appearance in Monochrome Hand Images. ICCV Hands Workshop

[

Paper

]

We explored self-supervised learning to disentangle the hand pose from its appearance in monochrome images, and showed improved pose estimation robustness.

Ryan Canales, Aline Normoyle, Yu Sun, Yuting Ye, Massimiliano Di Luca, Sophie Jörg. 2019.

Virtual Grasping Feedback and Virtual Hand Ownership. ACM SAP

[

Project

Paper

Video

]

We experimented with different visual styles for grasping virtual objects in VR. We found that showing the real hand poses with object penetration helps grasping efficiency, but users prefer physically consistent penetration-free poses.

Lorraine Lin, Aline Normoyle, Alexandra Adkins, Yun Sun, Andrew Robb, Yuting Ye, Max Di Luca, Sophie Jörg. 2019.

The Effect of Hand Size and Interaction Modality on the Virtual Hand Illusion. IEEE VR

[

Project

Paper

Video

Code

]

Our VR experiment found that a matching hand size with dexterous finger tracking is preferable for most users, but other conditions still provide a fun experience.

Abhronil Sengupta, Yuting Ye*, Robert Wang, Chiao Liu, Kaushik Roy. 2019.

Going Deeper in Spiking Neural Networks: VGG and Residual Architectures. Frontiers in Neuroscience

[

Project

Paper

]

Deep spiking neural networks are difficult to train. We instead convert deep neural networks from the analog domain to the spiking domain, with a small loss in accuracy.

2018

Shangchen Han, Beibei Liu, Robert Wang, Yuting Ye, Chris D. Twigg, Kenrick Kin. 2018.

Online Optical Marker-based Hand Tracking with Deep Labels. ACM Trans. Graph. (SIGGRAPH)

[

Project

Paper

BibTeX

Video

Data

]

We developed a deep-learning-based marker labeling algorithm for online capture of complex and dexterous hand motions, including hand-hand and hand-object interactions.

Jeongseok Lee, Michael X. Grey, Sehoon Ha, Tobias Kunz, Sumit Jain, Yuting Ye, Siddhartha S. Srinivasa, Mike Stilman, C. Karen Liu. 2018.

DART: Dynamic Animation and Robotics Toolkit. The Journal of Open Source Software

[

Project

Paper

BibTeX

]

2015

Daniel Zimmermann, Stelian Coros, Yuting Ye, Bob Sumner, Markus Gross. 2015.

Hierarchical Planning and Control for Complex Motor Tasks. SCA '15 Proceedings of the ACM SIGGRAPH/Eurographics Symposium on Computer Animation

[

Project

Paper

BibTeX

Video

]

We presented a planning and control framework that utilizes a hierarchy of dynamic models with increasing complexity and accuracy for complex tasks.

Stéphane Grabli, Kevin Sprout, Yuting Ye. 2015.

Feature-Based Texture Stretch Compensation for 3D Meshes. ACM SIGGRAPH Talks.

[

Paper

BibTeX

]

Stretching of rigid features on deforming skins usually betrays the synthetic nature of a CG creature. Our method mitigates such effects by re-parameterizing the texture coordinates to compensate for unwanted deformations on the 3D mesh.

Rachel Rose, Yuting Ye. 2015.

Multi-resolution Geometric Transfer for Jurassic World. ACM SIGGRAPH Talks.

[

Paper

BibTeX

]

We developed an easy-to-use workflow for artists to transfer geometric data between different mesh resolutions. Our tool enables efficient development of highly detailed creature assets such as the dinosaurs in "Jurassic World".

2013

Hao Li, Jihun Yu, Yuting Ye, Chris Bregler. 2013.

Realtime Facial Animation with On-the-fly Correctives. ACM Trans. Graph. (SIGGRAPH)

[

Project

Paper

BibTeX

Video

]

We developed a realtime facial tracking and retargeting system using an RGBD sensor. Our system produces accurate tracking results by continuously adapting to user-specific expressions on-the-fly.

Kiran Bhat, Rony Goldenthal, Yuting Ye, Ronald Mallet, Michael Koperwas. 2013.

High Fidelity Facial Animation Capturing and Retargeting With Contours. SCA '13 Proceedings of the ACM SIGGRAPH/Eurographics Symposium on Computer Animation.

[

Paper

BibTeX

Video

]

We developed a facial animation tracking system that utilizes eyelid and lip silhouettes for high fidelity results.

2012 and before

Sehoon Ha, Yuting Ye, C. Karen Liu. 2012.

Falling and Landing Motion Control for Character Animation. ACM Trans. Graph. (SIGGRAPH Asia)

[

Paper

BibTeX

Video

]

We developed a general controller that allows a character to fall from a wide range of heights and initial velocities, continuously roll on the ground, and get back on its feet.

Yuting Ye, C. Karen Liu. 2012.

Synthesis of Detailed Hand Manipulations Using Contact Sampling. ACM Trans. Graph. (SIGGRAPH)

[

Project

Paper

BibTeX

Video

]

This work synthesizes detailed and physically plausible hand-object manipulations from captured motions of the full-body and objects. By sampling contact points between the hand and the object, we can efficiently discover complex finger gaits.

Yuting Ye, C. Karen Liu. 2010.

Optimal Feedback Control for Character Animation Using an Abstract Model. ACM Trans. Graph. (SIGGRAPH)

[

Project

Paper

BibTeX

Video

]

We presented an optimal feedback controller for a virtual character to follow a reference motion under physical perturbations and changes in the environment by replanning long-term goals and adjusting the motion timing on-the-fly.

Yuting Ye, C. Karen Liu. 2010.

Synthesis of Responsive Motion Using a Dynamic Model. Computer Graphics Forum (Eurographics)

[

Project

Paper

BibTeX

Video

]

We presented a nonlinear dimensionality reduction model for learning responsive behaviors from very few motion capture examples. Our model can synthesize physically plausible motions of a character responding to unexpected perturbations.

Sumit Jain, Yuting Ye, C. Karen Liu. 2009.

Optimization-Based Interactive Motion Synthesis. ACM Trans. Graph. (TOG)

[

Project

Paper

BibTeX

Video

]

We presented a physics-based approach to synthesizing motions of a responsive virtual character in a dynamically varying environment. A constrained optimization problem that encodes high-level kinematic control strategies is solved at every time step.

Yuting Ye, C. Karen Liu. 2008.

Animating Responsive Characters with Dynamic Constraints in Near-Unactuated Coordinates. ACM Trans. Graph. (SIGGRAPH Asia)

[

Project

Paper

BibTeX

Video

]

We developed a novel algorithm to animate physically responsive virtual characters by combining kinematic pose control with dynamic constraints in the joint actuation space.

Sumit Jain, Yuting Ye, C. Karen Liu. 2007.

Optimization-based Interactive Motion Synthesis for Virtual Characters. ACM SIGGRAPH Sketches and Posters. (Third place in Student Research Competition)

[

Sketch

Poster

BibTeX

Video

]